By Nick Butler

Recently a group of leaders from the public and nonprofit sectors joined us here at Boost for a workshop with award-winning management consultant Dave Snowden. This blog post summarises Prof. Snowden’s process for achieving change in complex systems, and gives you more resources if you want to explore further. It also takes a quick look at how Snowden’s work fits with Agile.

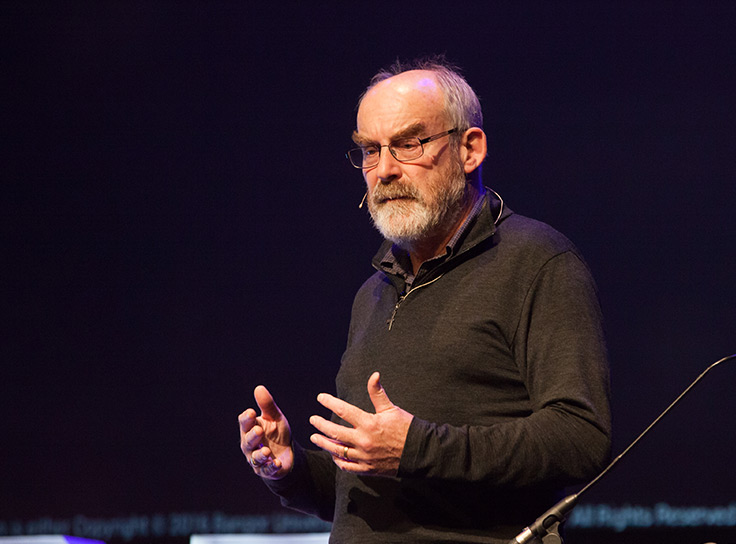

Dave Snowden is the director of the Centre for Applied Complexity at The University of Wales and founder of Cognitive Edge. Formerly he was a director of the Institute for Knowledge Management at IBM, where he developed the Cynefin framework, one of the first practical applications of complexity theory to management science.

Snowden warned us that his presentation would be something of a firehose of information and indeed it was. Concepts came firing in from evolutionary biology, neuroscience, management and many other disciplines, along with a revealing stream of anecdotes and examples. Any errors in attempting to channel this firehose are entirely my own.

Dave Snowden calls complexity the science of inherent uncertainty. “The other name for complexity is the science of common sense,” he says.

He describes three types of systems: ordered, complex and chaotic.

These don’t blend into each other. Systems flip between each type, in what Snowden calls a phase shift.

In a complex system, a small change in initial conditions can produce a wildly different result. The example often given is the famous “butterfly effect” in which the course of a tornado could be determined by the flap of a butterfly’s wings.
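To get a feel for that sensitivity, here is a minimal sketch using the logistic map, a standard toy model from chaos theory. It is my own illustration rather than something from the workshop, and the function name, the starting value and the size of the nudge are all assumptions chosen for the demo.

```python
def logistic_trajectory(x0, r=4.0, steps=50):
    """Iterate x -> r * x * (1 - x), starting from x0 (toy model, not Snowden's)."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1 - xs[-1]))
    return xs


# Two runs whose starting points differ by one part in a billion.
a = logistic_trajectory(0.2)
b = logistic_trajectory(0.2 + 1e-9)

# Distance between the two runs at every step.
gaps = [abs(p - q) for p, q in zip(a, b)]

for step in (0, 10, 20, 30, 40, 50):
    print(step, gaps[step])
```

The two runs are indistinguishable at first, then drift apart until they have nothing to do with each other. That is the point about "cases": a result from a system that started somewhere slightly different tells you little about where yours will end up.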

Dave Snowden says that managers tend to take an engineering approach. They treat complex systems as predictable mechanisms. And they make decisions based on what he calls “cases” — results from other systems. But they may well not get the same results because the context and initial conditions are different.

“Failure repeats but success rarely does,” he says.

He argues that there is a huge cost in creating an ordered system. Moreover, if you make your order too rigid you’ll collapse into chaos.

Snowden argues that we use the wrong model for judging probability and risk. We treat events as following a Gaussian distribution (bell curve) when the Pareto distribution (with its long tail) is more accurate. This means that low probability/high impact events (a.k.a. Black Swan events) are not as low probability as we think.
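A rough sketch makes the difference in the tails concrete. The parameters below (a standard normal, and a Pareto with minimum 1 and shape 2) are my own illustrative assumptions, not figures from the workshop, and the two distributions are not matched to any real data.

```python
import math


def gaussian_tail(x, mean=0.0, sd=1.0):
    """P(X > x) for a normal distribution."""
    z = (x - mean) / sd
    return 0.5 * math.erfc(z / math.sqrt(2))


def pareto_tail(x, x_min=1.0, alpha=2.0):
    """P(X > x) for a Pareto distribution with scale x_min and shape alpha."""
    if x < x_min:
        return 1.0
    return (x_min / x) ** alpha


# Chance of seeing an event bigger than each threshold under each model.
for threshold in (2, 5, 10):
    print(threshold, gaussian_tail(threshold), pareto_tail(threshold))
```

Under the bell curve, the chance of an extreme event shrinks so fast it is effectively zero. Under the long-tailed model it shrinks slowly, so "once in a lifetime" events turn up far more often than the bell curve suggests.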

As a result you can’t avoid failure. He argues we should try to make a system safe-to-fail, not fail-safe.

One way to make a system safe-to-fail is to keep track of what’s happening in the system. Do this in the right way and you get early warnings and guidance on what kind of interventions will work. You can take advantage of changes and repurpose them for your benefit. You work with, not against, the complex system.

Snowden suggests a new approach to Design Thinking (see our summary of using Design Thinking in product discovery).

You need to learn about your system so you can spot issues and solutions early. To do this you plug directly into a smart sensor network, a.k.a. the people in the system. This lets you create a fast feedback loop.

What you have to do is:

Just because we see things doesn’t mean that we pay attention, and even if we pay attention we don’t always act.

He says the ways we usually try to see what’s happening in a system don’t work. Things like focus groups, surveys, questionnaires and polls fail because people give the answers they think you want.

He gives three terms for the approach he describes:

Here’s how he describes it working.

Get participants to tell a story, then get them to interpret this story. The interpretations provide objective data and can be visualised to help you decide how to act. The stories provide context and detail and are useful for influencing decisions.

Imagine you want to find out how happy your staff are. The most common approach is to run a staff satisfaction survey. Snowden’s approach is to ask them to tell you a story. For example, you could ask “What story would you tell someone if they were thinking of joining your workgroup?”.

Snowden calls this a non-hypothesis-based question.

To get people to interpret the story, ask them to give the story a name. This, Snowden says, tells you a lot. Then give them six triangles. One of these might have its three points marked Altruistic, Assertive and Analytical. Notice that these are all positive qualities. Ask them to think about how decision-makers acted in the story. Then get them to decide where in the triangle they’d place this story. Because they don’t know what the right answer is, they can’t game the questions.
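One way to see how a mark inside a triangle becomes data is to convert it into three weights that add up to one (barycentric coordinates). This is only a sketch of the general idea. I don’t know how SenseMaker® stores responses, and the corner positions and the `triad_weights` function are my own assumptions.

```python
import math

# Assumed layout: an equilateral triangle with one quality at each corner.
CORNERS = {
    "Altruistic": (0.0, 0.0),
    "Assertive": (1.0, 0.0),
    "Analytical": (0.5, math.sqrt(3) / 2),
}


def triad_weights(x, y):
    """Turn a point inside the triangle into one weight per corner (sums to 1)."""
    (ax, ay), (bx, by), (cx, cy) = CORNERS.values()
    denom = (by - cy) * (ax - cx) + (cx - bx) * (ay - cy)
    w_a = ((by - cy) * (x - cx) + (cx - bx) * (y - cy)) / denom
    w_b = ((cy - ay) * (x - cx) + (ax - cx) * (y - cy)) / denom
    w_c = 1.0 - w_a - w_b
    return dict(zip(CORNERS, (w_a, w_b, w_c)))


# A story placed in the exact centre is an even mix of all three qualities.
centre = triad_weights(0.5, math.sqrt(3) / 6)
print(centre)

# A story placed close to the bottom-right corner leans towards Assertive.
print(triad_weights(0.9, 0.05))
```

Each story ends up as three numbers, which is what makes it possible to plot many people’s responses together and look for clusters.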

You can then ask yourself what you could do to create more stories that describe the sort of workplace you want to create.

Snowden describes this approach as a way of managing for exaptation. In evolutionary theory, exaptation takes place when features get used in ways for which they were not originally adapted. For instance, feathers initially evolved to keep dinosaurs warm, before they were co-opted for flight.

“Most human invention involves noticing side-effects then repurposing,” he says.

Using the SenseMaker® software that Snowden has developed, you can then plot everyone’s responses. This lets you identify pathways to change.

In the chart above you’ll see that responses cluster heavily in the bottom corners and the top right corner. There’s also a smaller cluster in the centre.

In this case, imagine that the desired position is in the top right.

You could set incentives to move people from the lower clusters. But Snowden says explicit incentives de-motivate (as well as having unwanted side-effects). Instead you could nudge people. You could move people to an “adjacent possible”, what he calls a nearby weak attractor. In this case that would be the smaller cluster in the centre. If there is no adjacent possible, think about what you can do to create one. Then you can nudge them again into the top right.

To come up with these nudges, ask yourself what you could do to change the stories in one of the lower clusters so they’re more like the ones in the adjacent possible. You can click on the individual data points to dig into the story and better understand what’s going on. This means that advocacy is baked into the evidence.

Snowden recommends you do lots of small initiatives. If one fails, you can fix it fast. Go with your best guesses using what he calls abductive reasoning — choose the simplest solution, which you can then test.

Snowden argues that because nudges are about direction, not goal, they’re not gamable.

Snowden gave the example of a project they did with the Australian Air Force on demotivated staff. They had a problem with officers leaving early.

They asked, “You’re a grandparent. Your grandchild says they want to join the Air Force. What would you tell them about your experience?”

The result showed a correlation between willingness to leave the Air Force and Lean Six Sigma being brought in.

The leader of the Air Force argued this was because Six Sigma hadn’t been explained properly. But when they clicked into the stories the first response to the question “what will you tell your grandchildren?” was a one-liner: “I’ll shoot them first and they’ll be grateful.” The Air Force head thought this would be a disgruntled young officer but when they clicked on the demographics it showed the story was from a warrant officer with thirty years’ service.

The next story had been given the name, “Why do we have to shit under the trees?” and it explained that the Six Sigma process meant a unit didn’t get their mobile latrine in time because they hadn’t filled out the forms properly.

“The head of the Air Force said, ‘we’ve taken Six Sigma too far’,” Snowden reports.

Working in an Agile development agency, I found that a lot of what Dave Snowden said sounded familiar. Take the Agile values for example. These favour:

- Individuals and interactions over processes and tools
- Working software over comprehensive documentation
- Customer collaboration over contract negotiation
- Responding to change over following a plan

You can see these reflected in Snowden’s process, with its focus on individual stories, baked-in advocacy, small initiatives and fast feedback loops.

Perhaps the similarity is no surprise since this process aims to achieve the same goals that led us to adopt Agile. We’re both working to help people make a positive and lasting impact in a complex world.

The Cynefin framework — a video introduction in under ten minutes

Complexity, citizen engagement in a Post-Social Media time | TEDx University of Nicosia — YouTube

How leaders change culture through small actions — YouTube

Managing complexity by reducing batch size — Boost blog

A big thank you to Mark Anderson, the Director of the Cynefin Centre New Zealand, for helping make these workshops possible.